In last month’s installment of The Primer, we looked at methods for detecting and classifying the smallest type of genetic variation, SNPs. At the other end of the size scale are duplications or deletions of entire chromosomal regions or even whole chromosomes, which change the copy number of the affected regions and are referred to as copy number variations (CNVs). While some genes have complex regulatory mechanisms that allow them to adjust their expression levels and compensate for CNV, others do not, and for these, departures from the normal number of gene copies can have serious effects. A classic example is trisomy 21 (Down syndrome), which is not generally thought to require an entire extra chromosome 21 but may arise whenever there is an extra copy of a relatively small region approximately six million bases (6 Mb) in length. (The exact extent of the minimal duplicated region in Down syndrome is still being studied, but this figure serves for illustrative purposes.)

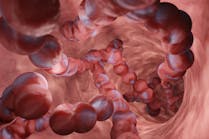

Historically, chromosomal duplications or deletions large enough to be seen have been detected by microscopic examination of metaphase chromosome preparations using classical cytogenetic techniques. Molecular technologies have since given the modern cytogeneticist additional, higher-resolution tools for detecting CNVs. One particularly useful (and elegant) method is array comparative genomic hybridization (array CGH, or aCGH), our focus this month (Figure 1).

Figure 1. Array CGH protocol. Source: Emmanuel Barillot, Laurence Calzone, Philippe Hupé, Jean-Philippe Vert, Andrei Zinovyev. Computational Systems Biology of Cancer. Chapman & Hall/CRC Mathematical & Computational Biology. 2012.

Key concepts

Three technical concepts underlie array CGH. The first is random-primed fluorescent labelling of a total genomic DNA sample. Imagine mixing a cellular DNA sample with a large number of “random hexamers”: six-base synthetic DNA oligonucleotides supplied as a mixture of all 4096 (i.e., 4⁶) possible sequences in approximately equal amounts. Add deoxynucleoside triphosphates (dNTPs), of which one (or, more commonly, a fraction of one) carries a fluorescent label, and a DNA polymerase. Now heat-denature the sample to separate the genomic DNA strands, and cool it quickly to a low temperature. This allows the random hexamers to anneal “at random” all over the genome and provide starting points from which the polymerase extends new single strands that are complementary to the genome and incorporate the fluorescent nucleotide.
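To make the hexamer arithmetic and the idea of “annealing at random” concrete, here is a minimal Python sketch. It enumerates every possible six-base sequence and finds where one primer could anneal on a short template. The template and primer sequences are hypothetical, and annealing is reduced to an exact reverse-complement match, ignoring mismatch tolerance and melting temperature.

```python
from itertools import product

BASES = "ACGT"
COMPLEMENT = str.maketrans("ACGT", "TGCA")

def all_hexamers(k: int = 6) -> list[str]:
    # Every possible k-base sequence over A, C, G, T: 4**k of them.
    return ["".join(p) for p in product(BASES, repeat=k)]

def reverse_complement(seq: str) -> str:
    return seq.translate(COMPLEMENT)[::-1]

def annealing_positions(template: str, primer: str) -> list[int]:
    # Simplification: a primer anneals (antiparallel) only where the template
    # strand carries its exact reverse complement; return 0-based start sites.
    target = reverse_complement(primer)
    k = len(primer)
    return [i for i in range(len(template) - k + 1) if template[i:i + k] == target]

hexamers = all_hexamers()
print(len(hexamers))  # 4096, i.e. 4**6
print(annealing_positions("GGAAACGTCCAAACGT", "ACGTTT"))  # [2, 10]
```

In a real reaction every one of the 4096 hexamers is present at once, so a genome that is billions of bases long offers each of them very many potential priming sites.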

Together, this population of new, fluorescently labelled DNA strands forms a set of relatively short “probes” that effectively covers most of the genome. Because priming occurs “at random”, template sequences that occur more frequently in the genome yield more labelled probe sequences than less common ones. The reader may at this point object that the process must carry intrinsic bias: not all hexamers will be present at exactly the same concentration, and not all template sequences will be copied with equal efficiency, owing to secondary structure, variation in GC content, and the like. This is absolutely correct, but the reader is asked to hold this concern for now, as the elegance of the technique lies in how this effect is cancelled out.

A second key concept is that of a defined “reference genome” with all genetic regions at their proper, “normal” copy number. While the idea of a reference genome as an abstraction is neither complex nor unfamiliar, array CGH requires it to be an actual, tangible material that is used in the experimental procedure. Fortunately, this reference material need only have normal copy number in all genetic regions; the presence or absence of particular point mutations such as SNPs does not matter. As a result, any of a number of well-characterized cell lines can serve as the reference genome source.

The third key concept is that of a whole-genome array. Although it does not literally contain the whole genome, such an array consists of tens to hundreds of thousands of position-indexed, single-stranded DNA sequences complementary to regularly spaced sections of the human genome. Thus, a hypothetical array of 125,000 distinct spots would ideally carry one sequence every 24 kb (3 × 10⁹ base pairs in the human haploid genome, divided by 125,000), while one of only 30,000 spots would correspond to an ideal spacing of one probe sequence per 100 kb. The array is printed on a solid substrate such as a glass slide and paired with a hybridization/washing system and a fluorescent optical reader capable of resolving the individual spots.
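The spacing arithmetic above is simply genome size divided by the number of spots. A short sketch, assuming an approximate human haploid genome of 3 × 10⁹ bp and perfectly even probe spacing (real arrays are not uniformly spaced):

```python
# Approximate human haploid genome size, ~3 x 10^9 bp (rounded assumption).
HAPLOID_GENOME_BP = 3_000_000_000

def mean_probe_spacing_kb(n_spots: int, genome_bp: int = HAPLOID_GENOME_BP) -> float:
    """Ideal (evenly spaced) distance between array probes, in kilobases."""
    return genome_bp / n_spots / 1_000

for spots in (125_000, 30_000):
    print(f"{spots:>7,} spots -> one probe every {mean_probe_spacing_kb(spots):.0f} kb")
```

This prints 24 kb for the 125,000-spot array and 100 kb for the 30,000-spot array, matching the figures in the text.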

Paired reactions: the red and the green

To perform the actual experiment, two parallel reactions are set up: one with sample DNA and one with reference DNA. The total amount of input DNA must be the same in both, which is achieved by direct measurement (such as A260 spectrophotometric absorbance, or related methods) followed by dilution until the input amounts in nanograms match. The reactions are otherwise set up almost identically, the key difference being that a different fluorophore label is used in each. Normally one reaction uses a red fluorophore and the other a green one, which we will assume for our example; however, any two spectrally resolvable fluorophores could be used. For the sake of argument, let’s use the red fluorophore-labelled nucleotide in the reference reaction and the green fluorophore-labelled nucleotide in the sample reaction.
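Equalizing the two inputs comes down to converting an absorbance reading into a concentration and then working out a dilution. The sketch below uses the conventional factor for double-stranded DNA (an A260 of 1.0 in a 1 cm path corresponds to roughly 50 ng/µL). The absorbance readings, the 500 ng target input and the 20 µL final volume are hypothetical values chosen for illustration, not recommendations from any specific array CGH protocol.

```python
# Conventional dsDNA factor: A260 = 1.0 (1 cm path) ~ 50 ng/uL.
DSDNA_NG_PER_UL_PER_A260 = 50.0

def dsdna_conc_ng_per_ul(a260: float, dilution_factor: float = 1.0) -> float:
    """Stock concentration from an A260 reading taken on a diluted aliquot."""
    return a260 * DSDNA_NG_PER_UL_PER_A260 * dilution_factor

def volumes_for_input(conc_ng_per_ul: float, target_ng: float, final_ul: float) -> tuple[float, float]:
    """Return (uL of DNA stock, uL of water) giving target_ng in final_ul total."""
    stock_ul = target_ng / conc_ng_per_ul
    if stock_ul > final_ul:
        raise ValueError("stock too dilute for this input amount and volume")
    return stock_ul, final_ul - stock_ul

# Hypothetical readings, both measured on 1:10 dilutions of the stocks.
reference = dsdna_conc_ng_per_ul(0.25, dilution_factor=10)  # ~125 ng/uL
test = dsdna_conc_ng_per_ul(0.10, dilution_factor=10)       # ~50 ng/uL

for name, conc in (("reference", reference), ("test", test)):
    stock, water = volumes_for_input(conc, target_ng=500, final_ul=20)
    print(f"{name}: {stock:.1f} uL DNA + {water:.1f} uL water")
```

Both reactions then start from the same nanogram input in the same volume, so any later difference in labelled product reflects the genomes rather than the pipetting.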

After the reactions, we have two pools of randomly primed, fluorescently labelled DNA products: the red ones represent the “whole” reference genome, and the green ones represent the “whole” sample genome. I place “whole” in quotation marks because, as mentioned above, this representation isn’t truly unbiased. Note, however, that bias arising from intrinsic sequence features that inhibit replication, or from imperfect equality in the amount or priming efficiency of each random hexamer, should affect the reference and test samples equally. Thus, whether a genetic region is well or poorly represented in general, any difference in its representation between the two samples should arise only from a difference in the amount of template. A region present twice (once per haploid genome) in the reference sample but only once in the test sample should generate only about half as many green labelled probes as red labelled probes. Conversely, if the region is present three (or ten) times in the test sample, there should be roughly 1.5 (or five) times as many green labelled probes as red labelled probes for that region.
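The cancellation argument can be checked with a toy model. In the sketch below, each region is given a random, hypothetical labelling efficiency (standing in for GC content, secondary structure and uneven hexamer priming) that is applied identically to both reactions. Yield is assumed to be strictly proportional to copies times efficiency, which is an idealization.

```python
import random

def labelled_yield(copies: int, efficiency: float, scale: float = 1000.0) -> float:
    # Idealized model: labelled probe yield is proportional to template copies
    # times a region-specific efficiency shared by both reactions.
    return copies * efficiency * scale

def green_red_ratios(reference_copies: dict, test_copies: dict, seed: int = 0) -> dict:
    rng = random.Random(seed)
    ratios = {}
    for region, ref_n in reference_copies.items():
        efficiency = rng.uniform(0.2, 1.0)  # hidden bias, identical in both reactions
        red = labelled_yield(ref_n, efficiency)                  # reference reaction
        green = labelled_yield(test_copies[region], efficiency)  # test reaction
        ratios[region] = green / red
    return ratios

# Hypothetical regions: diploid reference vs. a test sample with CNVs.
REFERENCE = {"A": 2, "B": 2, "C": 2, "D": 2}
TEST = {"A": 2, "B": 1, "C": 3, "D": 10}

for region, ratio in green_red_ratios(REFERENCE, TEST).items():
    print(region, round(ratio, 2))
```

Whatever efficiencies are drawn, the green-to-red ratios come out at about 1.0, 0.5, 1.5 and 5.0: the test copy number divided by the reference copy number.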

To read out the results, all we need to do is mix the two products and hybridize them to the array. Recall that each spot on the array actually consists of thousands of identical copies of the same sequence; it should then become clear that we are in effect setting up a competition between red-labelled (reference) and green-labelled (test) products for hybridization to each spot. If approximately equal amounts of red and green products cover the region of a given array probe, the spot will bind roughly equal numbers of each and will appear “yellow” when scanned, the combined appearance of the two colors. If, however, red probes predominate for the spot’s genomic complement (that is, the test sample has a deletion of this region), the spot will appear reddish; and if green probes are in excess (the region is duplicated in the test sample), the spot will appear greenish.
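The competition at a single spot can be caricatured as follows. This is a minimal sketch under strong assumptions: both labels hybridize with equal affinity, and the spot does not noticeably deplete either pool, so the fraction of bound strands that are green simply mirrors the green fraction of the mix. The `tolerance` band that counts as “yellow” is a hypothetical parameter.

```python
def green_fraction(green_probes: float, red_probes: float) -> float:
    # Equal-affinity assumption: bound strands mirror the composition of the mix.
    return green_probes / (green_probes + red_probes)

def spot_color(green_probes: float, red_probes: float, tolerance: float = 0.05) -> str:
    # `tolerance` is a hypothetical band around 0.5 treated as "yellow".
    f = green_fraction(green_probes, red_probes)
    if f > 0.5 + tolerance:
        return "greenish"
    if f < 0.5 - tolerance:
        return "reddish"
    return "yellow"

# Test-sample copies against a diploid reference (relative probe amounts).
for test_copies in (1, 2, 3, 10):
    print(test_copies, round(green_fraction(test_copies, 2), 2), spot_color(test_copies, 2))
```

A single-copy loss leaves the spot about one-third green (reddish), an unchanged region sits at one-half (yellow), and gains push the spot toward green.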

In effect, we directly observe the ratio of green to red, thereby “dividing out” any region-specific biases common to both samples, as discussed above. The whole array is scanned after hybridization and washing, and spots with a net greenish or reddish appearance are identified. Each array location is in turn related to the genomic position it represents (i.e., which chromosome and region, to the resolution of the array), and optical analysis of the relative green and red intensities can indicate the degree of copy number variation in the test sample relative to the reference. All of this can be done automatically by software.
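The software step can be sketched as: compute log2(green/red) for each spot, re-centre so that a typical spot sits at zero, and flag spots beyond a threshold, reporting them by genomic position. For a pure sample against a diploid reference, a one-copy loss gives log2(1/2) = -1 and a one-copy gain log2(3/2) ≈ 0.58. The spot table, the median-centring step and the ±0.3 threshold below are hypothetical choices for illustration, not the method of any particular analysis package.

```python
import math
from statistics import median

# Hypothetical scanner output: (chromosome, position_bp, green, red) per spot.
SPOTS = [
    ("chr1", 1_000_000, 1020.0, 1000.0),
    ("chr1", 1_100_000, 980.0, 1010.0),
    ("chr21", 30_000_000, 1490.0, 1000.0),
    ("chr21", 30_100_000, 1530.0, 1010.0),
    ("chrX", 50_000_000, 505.0, 1000.0),
]

def call_spots(spots, threshold=0.3):
    """Return (chrom, pos, centred log2 green/red, call) for each spot."""
    log_ratios = [math.log2(green / red) for _, _, green, red in spots]
    # Assumption: most spots are unchanged, so the median log2 ratio estimates
    # any overall red/green imbalance; subtract it before calling.
    centre = median(log_ratios)
    calls = []
    for (chrom, pos, _, _), lr in zip(spots, log_ratios):
        centred = lr - centre
        if centred > threshold:
            call = "gain"
        elif centred < -threshold:
            call = "loss"
        else:
            call = "normal"
        calls.append((chrom, pos, round(centred, 2), call))
    return calls

for row in call_spots(SPOTS):
    print(row)
```

Real arrays have far more spots, and analysis tools typically also smooth or segment neighbouring spots so that a CNV is called from a run of consistent ratios rather than from a single spot.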

aCGH: strengths and limitations

The method is a powerful approach to detecting CNV, with a size resolution much finer than that achievable with classical cytogenetics or fluorescence in situ hybridization (FISH). In principle, the resolution is set by the density of the array (the number of elements, and thus their average spacing along the genome), but in any case it is much finer than the size of CNV anomalies visible by metaphase staining. In addition, the method provides a numeric measure of the degree of CNV (that is, how far a sequence is over- or underrepresented) and lends itself to at least partial automation, with higher throughput and simpler sample preparation than classical cytogenetics. It is therefore not surprising that it is becoming a widely used tool in the modern cytogenetics laboratory.
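One way to see how array density sets resolution: suppose, as a hypothetical calling rule, that a CNV must span at least three probes to be reported. With evenly spaced probes every s bases, a CNV of length 3s always covers exactly three probes wherever it falls, while anything shorter can slip between them. The three-probe rule and the even spacing are both assumptions made for this sketch.

```python
def probes_in_window(start_bp: int, length_bp: int, spacing_bp: int) -> int:
    """Count probes at 0, s, 2s, ... lying in the half-open window [start, start + length)."""
    first = -(-start_bp // spacing_bp)                  # ceil(start / s)
    last = -(-(start_bp + length_bp) // spacing_bp) - 1  # ceil(end / s) - 1
    return max(0, last - first + 1)

def min_guaranteed_cnv_bp(spacing_bp: int, min_probes: int = 3) -> int:
    # A CNV of min_probes * spacing always covers min_probes probes,
    # regardless of where it falls relative to the probe grid.
    return min_probes * spacing_bp

for spots, spacing in ((125_000, 24_000), (30_000, 100_000)):
    size = min_guaranteed_cnv_bp(spacing)
    print(f"{spots:,} spots: CNVs of {size // 1000} kb or more always span 3 probes")
```

Under these assumptions the 125,000-spot array reliably catches CNVs of about 72 kb and up, and the 30,000-spot array about 300 kb and up.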

The reader should not, however, expect array CGH to replace traditional cytogenetics, but rather to work alongside the older methods. An important reason lies in what array CGH cannot see: balanced translocations. These rearrangements, in which non-homologous chromosomes swap regions, underlie a number of important medical conditions (such as Burkitt’s lymphoma, which can arise from a reciprocal translocation between chromosomes 8 and 14). Because they cause no change in the net cellular copy number of any genomic region, however, such rearrangements appear normal in an array CGH experiment. Classical cytogenetics, or FISH with probes for the suspected translocation partners, remains the primary tool for detecting such cases. Today’s molecular cytogenetics lab therefore often combines older and newer techniques to build a fuller picture of a sample’s genomic organization.
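Why a balanced translocation is invisible to array CGH can be shown with a toy genome in which each chromosome is a list of labelled segments. The segment names and breakpoints are hypothetical and are not meant to reflect the actual breakpoints in Burkitt’s lymphoma. Swapping the distal segments between two chromosomes leaves every segment’s copy number unchanged, whereas losing one derivative chromosome (an unbalanced outcome) does not.

```python
from collections import Counter

# Toy chromosomes as ordered lists of hypothetical segment labels.
CHR8 = ["8p", "8q_prox", "8q_dist"]
CHR14 = ["14p", "14q_prox", "14q_dist"]

def reciprocal_translocation(a, b, break_a, break_b):
    # Exchange everything distal to the breakpoints between two chromosomes.
    return a[:break_a] + b[break_b:], b[:break_b] + a[break_a:]

def segment_copy_numbers(chromosomes):
    # What array CGH effectively measures: copies of each segment, ignoring order.
    return Counter(seg for chrom in chromosomes for seg in chrom)

DER8, DER14 = reciprocal_translocation(CHR8, CHR14, 2, 2)
NORMAL = [CHR8, CHR8, CHR14, CHR14]
BALANCED = [CHR8, DER8, CHR14, DER14]
UNBALANCED = [CHR8, DER8, CHR14, CHR14]  # der(14) lost

print(segment_copy_numbers(NORMAL) == segment_copy_numbers(BALANCED))    # True
print(segment_copy_numbers(NORMAL) == segment_copy_numbers(UNBALANCED))  # False
```

The rearranged order of segments, which is what a karyotype or FISH reveals, never enters the copy-number count.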

One other note about this technique: despite the cleverness of using the signal ratio between reference and test materials to cancel sequence-specific biases, array CGH can still produce misleading results. In some cases, array CGH findings are therefore confirmed by quantitative real-time PCR (qPCR), measuring the potential CNV region against one or more internal control regions in the same sample.
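One common way to turn such qPCR measurements into a copy number estimate is the comparative Ct (ΔΔCt) method. It normalizes the target region to a control region in each sample, then compares the test sample with a calibrator (here, the reference DNA, assumed diploid for the target). The sketch assumes close to 100% amplification efficiency, so that each cycle doubles the product, and the Ct values are hypothetical.

```python
def relative_copy_number(ct_target_test: float, ct_control_test: float,
                         ct_target_ref: float, ct_control_ref: float,
                         ref_copies: int = 2) -> float:
    """Estimate target copies in the test sample by the comparative Ct method."""
    delta_test = ct_target_test - ct_control_test  # normalize to control region
    delta_ref = ct_target_ref - ct_control_ref
    ddct = delta_test - delta_ref
    # Assumes ~100% efficiency: one cycle earlier means twice the template.
    return ref_copies * 2 ** (-ddct)

# Hypothetical Ct values: target comes up one cycle late in the test sample.
print(relative_copy_number(25.0, 24.0, 24.0, 24.0))            # 1.0 (deletion)
print(round(relative_copy_number(23.4, 24.0, 24.0, 24.0), 2))  # ~3.03 (gain)
```

Agreement between the array ratio and an independent qPCR estimate like this gives more confidence that a called CNV is real.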

Finally, the reader may wonder whether next-generation sequencing (NGS) will supplant array CGH. NGS data can indeed be queried bioinformatically for the number of “reads” mapping to any genomic region, and CNV can be detected as an over- or under-abundance of reads for one region relative to the rest of the sample genome. For now, however, this approach is neither as fast nor as cost-effective as array CGH.
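The read-depth idea can be sketched in a few lines: count reads in fixed genomic bins, treat the median bin as the baseline copy number, and scale each bin accordingly. Here the rest of the sample genome plays the role that the co-hybridized reference plays in array CGH. The bin names and counts are hypothetical, and real pipelines also correct for effects such as GC content and mappability, which this sketch ignores.

```python
from statistics import median

def read_depth_copy_number(bin_counts: dict, baseline_copies: int = 2) -> dict:
    # Assumption: most bins are at the baseline copy number, so the median
    # read count per bin corresponds to `baseline_copies`.
    m = median(bin_counts.values())
    return {b: baseline_copies * c / m for b, c in bin_counts.items()}

# Hypothetical read counts in 100 kb bins.
COUNTS = {
    "chr1:0-100kb": 1010,
    "chr1:100-200kb": 985,
    "chr1:200-300kb": 1000,
    "chr1:300-400kb": 1495,
    "chr1:400-500kb": 502,
}

for bin_name, cn in read_depth_copy_number(COUNTS).items():
    print(bin_name, round(cn, 1))
```

The fourth bin comes out near three copies and the fifth near one, the same kind of gain and loss that an array CGH ratio would report for those regions.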