How data analytics improves operational performance at clinical labs

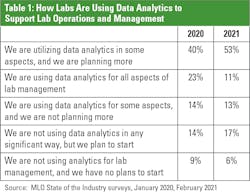

Labs are using increasingly sophisticated approaches to data analytics to improve their operations.

Most labs use what is known as descriptive analytics, which involves interpreting what has already happened. Examples include the average lab test turnaround time yesterday or the total units of red blood cells used last week.

According to Medical Laboratory Observer’s 2021 State of the Industry survey, 77% of labs are now using analytics.¹ The same survey found that many labs are monitoring the following key performance indicators (KPIs): test turnaround time, cost per test, billable versus performed tests, staff productivity goals, medical necessity, and unnecessary tests.

“Labs are data factories, and analytics is the tool to understand what all this data is telling us. Not only does analytics shine a brighter light on the available data, but it integrates disparate data and reveals new information that leads to better insight and decision-making. All of this affects return on investment (ROI),” says Maarten van As, New Markets Development Leader at LabVantage Solutions.

More sophisticated uses of descriptive analytics can draw on data sources that are updated more often than monthly, although instantaneous data availability is rare at labs, according to Winsten, who adds that timely access to data is critical for labs that want to improve their operational performance.

Another issue with the approach to data analytics at many labs is the use of manual steps. “Today, many labs use manual or partially manual methods to identify and track key lab metrics. In addition to evaluating the traditional ROI metrics like improved turnaround time and other operational parameters, it’s important for labs to consider the opportunity cost of manual analytics processes,” says Ryan Stephens, Group Marketing Manager for Automation and Core Lab IT at Roche Diagnostics.

Leading indicators

At Henry Ford Health System, the Department of Pathology and Laboratory Medicine is tapping into real-time data, which allows it to monitor work in progress and intervene to improve performance, rather than analyzing past performance to improve future performance.

“Right now, we are trying to find all the leading indicators of value that we can,” Tuthill explains. “We know leading indicators are what create the information that you’re going to see in tomorrow’s data.”

One example is how the lab tracks samples from patients in the emergency room – where the goal is to report test results for 90% of those samples within 45 minutes.
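A goal like “90% of samples within 45 minutes” is a threshold compliance metric rather than an average. The sketch below shows how such a figure could be computed, assuming each ED sample is reduced to its turnaround time in minutes; the function names and sample data are hypothetical and do not describe Henry Ford’s actual system.

```python
def tat_compliance(turnaround_minutes, limit_minutes=45.0):
    """Return the fraction of samples resulted within limit_minutes."""
    if not turnaround_minutes:
        raise ValueError("no samples to evaluate")
    on_time = sum(1 for t in turnaround_minutes if t <= limit_minutes)
    return on_time / len(turnaround_minutes)


def meets_goal(turnaround_minutes, limit_minutes=45.0, target=0.90):
    """True if at least `target` of the samples met the turnaround limit."""
    return tat_compliance(turnaround_minutes, limit_minutes) >= target


# Hypothetical turnaround times (minutes) for ten ED samples.
ed_tats = [31, 38, 42, 44, 45, 29, 52, 40, 36, 47]
print(f"{tat_compliance(ed_tats):.0%}")  # 80%
print(meets_goal(ed_tats))  # False
```

A threshold metric like this can expose a tail of delayed samples that a mean turnaround time would hide.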

To help achieve this performance metric, the lab monitors the progress of those samples in real time and displays the information on a large electronic screen mounted on the wall in the core lab. “If Mr. Jones’ test has not been resulted at fifty minutes, he moves to the top of the screen and has a red coloration in his row. And we know that Mr. Jones’ testing was delayed. If we look at the monitor, and there are seventeen rows, and they’re all red, we know that we’ve got a little problem going on. What’s the problem? Well, who knows? Maybe the line is jammed, or maybe the instrument went down,” Tuthill explains.
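The wall-board behavior described above amounts to ordering pending samples by how long they have waited and flagging those past a cutoff. A minimal sketch, assuming a hypothetical `PendingSample` record with an order timestamp and a 50-minute flag taken from the quote; the patient names and times are made up, and a real board would pull this data from the lab’s information system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PendingSample:
    patient: str
    ordered_at: datetime  # hypothetical: when the turnaround clock started


def board_rows(pending, now, flag_after=timedelta(minutes=50)):
    """Return (patient, elapsed whole minutes, is_red) rows, longest wait first."""
    rows = []
    # Oldest orders first, so delayed samples rise to the top of the board.
    for s in sorted(pending, key=lambda s: s.ordered_at):
        elapsed = now - s.ordered_at
        minutes = int(elapsed.total_seconds() // 60)
        rows.append((s.patient, minutes, elapsed >= flag_after))
    return rows


now = datetime(2021, 5, 1, 12, 0)
pending = [
    PendingSample("Smith", now - timedelta(minutes=20)),
    PendingSample("Jones", now - timedelta(minutes=52)),
    PendingSample("Lee", now - timedelta(minutes=35)),
]
for patient, minutes, red in board_rows(pending, now):
    print(f"{patient:<6} {minutes:>3} min {'RED' if red else ''}")
```

If many rows turn red at once, the pattern itself is the signal: the cause is likely upstream (a jammed line or a down instrument) rather than any single sample.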

There are seven of these boards hanging on walls in the lab, and they each display different information. In addition to the emergency department, some boards track orders for outreach physicians or the surgery department, while another board tracks the movement of couriers picking up specimens.

Tracking COVID-19 testing

Edward-Elmhurst Health, based in Warrenville, IL, sometimes uses what it calls near real-time data in analytics. For example, it refreshes data as often as hourly to monitor COVID-19 testing.

The data feeds a dashboard that shows whether each test is pre-procedural, the type of test (such as high-throughput or rapid), turnaround times, and the average turnaround time over the last seven days. Most of the data is updated daily. However, the lab also tracks tests as they are ordered in the electronic health record (EHR), and this information is refreshed as often as hourly.
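A seven-day average turnaround time is a trailing-window calculation over recent results. The sketch below assumes hypothetical (result date, turnaround hours) records; the source does not describe Edward-Elmhurst’s actual dashboard tooling.

```python
from datetime import date, timedelta
from statistics import mean


def trailing_avg_tat(results, as_of, days=7):
    """Mean turnaround (hours) for results dated in the `days` days ending on as_of."""
    start = as_of - timedelta(days=days - 1)
    window = [tat for d, tat in results if start <= d <= as_of]
    return mean(window) if window else None  # None when no results in window


# Hypothetical (result date, turnaround hours) records.
results = [
    (date(2020, 12, 1), 30.0),  # falls outside the window ending Dec 9
    (date(2020, 12, 3), 26.0),
    (date(2020, 12, 6), 22.0),
    (date(2020, 12, 9), 18.0),
]
print(trailing_avg_tat(results, as_of=date(2020, 12, 9)))
```

Recomputing this once a day matches the daily refresh described above; the hourly order feed would be a separate, more frequent query.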

The ability to monitor COVID-19 test orders as physicians place them has been particularly useful since September, when lab staff began collecting samples at eleven locations. Before then, the health system collected all samples for patients with symptoms (unless they came through the emergency department) at a single, outdoor location.

“It helps us predict what kind of volume we’re going to have, and that was extremely helpful when we had our surge from the middle of October until the end of December,” Cluver says. “We could adjust our staffing, not only to perform the testing, but to do the collections as well.”

Now that testing demand is more predictable, Cluver says lab managers are no longer monitoring the information hourly; they are reviewing it daily.

About one-third of the lab’s COVID-19 tests are rapid tests performed at the health system’s clinics, and two-thirds are PCR tests performed in the lab, primarily on high-throughput analyzers.

Enabling performance improvement

Labs also use descriptive analytics to support quality improvement projects.

For example, Northwell Health used an automated system to measure the volume of blood in vials collected for blood cultures across ten hospitals for four months to establish baseline levels. The health system repeated the measurements after completing a 36-month educational program with providers to improve fill rates.

Incorrect culture results can lead to overuse of antibiotics, which exacerbates antimicrobial resistance, or to underuse of antibiotics, which leads to poor health outcomes.

Reimbursement also can be impacted. An incorrect fill rate, “quite frankly, could cause a patient coming through our ED to have a negative culture result. Then we find out that, in fact, the patient did have something going on, and now we have a hospital-acquired infection, which will impact our reimbursement,” says Castagnaro, referring to the fact that the Centers for Medicare & Medicaid Services (CMS) will not reimburse providers for the costs associated with treating some hospital-acquired infections.

For adult patients at Northwell, staff members fill two vials, with an ideal fill volume of 8–10 mL per bottle, he says.

Before the improvement project, the average fill volume was 2.3 mL. After the project, the health system’s average fill volume increased to 8.6 mL. The sample positivity rate for pathogens increased from 7.39% to 8.85%, while the contamination rate did not change.²
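The reported figures can be put in context with simple arithmetic. The check below uses only the numbers stated above and assumes the reported averages are per bottle, so they can be compared with the 8–10 mL target; it says nothing about statistical significance.

```python
def pct_point_change(before, after):
    """Absolute change between two percentages, in percentage points."""
    return after - before


def relative_change(before, after):
    """Change as a fraction of the starting value."""
    return (after - before) / before


before_pos, after_pos = 7.39, 8.85  # positivity rate, %
print(f"{pct_point_change(before_pos, after_pos):.2f} percentage points")  # 1.46
print(f"{relative_change(before_pos, after_pos):.1%} relative increase")  # 19.8%

# Assumption: reported average fill volumes are per bottle.
target_low, target_high = 8.0, 10.0  # ideal mL per bottle
for label, volume in [("before", 2.3), ("after", 8.6)]:
    in_range = target_low <= volume <= target_high
    print(label, volume, "mL:", "in target range" if in_range else "below target")
```

In other words, positivity rose by about 1.46 percentage points, a relative increase of roughly 20%, and the post-project average moved from well below the target range to inside it.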

Castagnaro says the health system continues to collect data on blood vial fill rates. “We haven’t stopped because it’s an important metric,” he says.

Advanced analytics

Despite the utility of descriptive analytics, experts say that predictive analytics and prescriptive analytics allow for even greater operational improvement, although they are not widely used in clinical laboratories. Predictive analytics involves assessing the likelihood of a future event based on a set of facts, while prescriptive analytics takes that a step further by suggesting actions.
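To make the three levels concrete, here is a deliberately simple sketch: a descriptive step summarizes past daily test volumes, a predictive step projects tomorrow’s volume with a least-squares linear trend, and a prescriptive step turns that projection into a suggested staffing level. The volumes, the tests-per-technologist capacity, and the function names are all hypothetical; predictive models used in practice are far more sophisticated than a straight-line trend.

```python
import math
from statistics import mean


def describe(volumes):
    """Descriptive: summarize what already happened."""
    return {"mean": mean(volumes), "last": volumes[-1]}


def predict_next(volumes):
    """Predictive: fit a least-squares line and extrapolate one day ahead."""
    n = len(volumes)
    if n < 2:
        raise ValueError("need at least two days of data")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(volumes)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, volumes)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return y_bar + slope * (n - x_bar)


def prescribe_staff(predicted_volume, tests_per_tech=400):
    """Prescriptive: suggest an action, here a technologist count (hypothetical capacity)."""
    return math.ceil(predicted_volume / tests_per_tech)


daily_volumes = [1200, 1350, 1500, 1650, 1800]  # hypothetical tests per day
print(describe(daily_volumes))
forecast = predict_next(daily_volumes)
print(round(forecast))  # 1950
print(prescribe_staff(forecast))  # 5
```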

“The level of sophistication jumps up significantly from each of these levels,” Winsten says. “Even though there’s a lot of great artificial intelligence out there, it’s not widely disseminated yet.”

Bickley says examples of predictive analytics in which lab data is critical include models that assess the risk of septicemia and acute kidney injury. “Labs can play a vital role in improving immediate care and patient outcomes with access to data. There are many protocols that can be automated with laboratory analytics,” he says.

References

1. Nadeau K. Lab administrators prioritize accurate and timely financial and operational performance. Medical Laboratory Observer. January 21, 2021. https://www.mlo-online.com/information-technology/analytics/article/21206709/lab-administrators-prioritize-accurate-and-timely-financial-and-operational-performance. Accessed May 1, 2021.

2. Khare R, Kothari T, Castagnaro, et al. Active monitoring and feedback to improve blood culture fill volumes and positivity across a large integrated health system. Clin Infect Dis. 2020;70(2):262-268. https://doi.org/10.1093/cid/ciz198.