Artificial intelligence in healthcare…Friend or foe?

To take the March CE test online go HERE. For more information, visit the Continuing Education tab.

LEARNING OBJECTIVES

Upon completion of this article, the reader will be able to:

1. Summarize the foundation of AI and its presence in healthcare.

2. Differentiate the computer science tests that have proven AI to be valuable, and define the factors that make up the elements of AI.

3. Identify examples of how AI has been used in the field of healthcare.

4. Discuss reasons for failures of AI in healthcare settings.

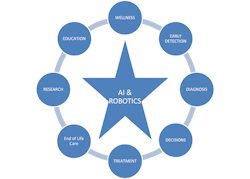

In the last several decades, advances in computer technology have created numerous opportunities for collecting vast amounts of information, or ‘big data.’ Managing such data and maximizing its practical use set the stage for artificial intelligence (AI). This technology has been used in banking, marketing, manufacturing, entertainment, art, traffic control, and even agriculture.1 In the last decade or so, AI and robotics have been incorporated into various healthcare practices, from keeping people healthy to providing early warning of potential diseases. Figure 1 shows the impact of AI and robotics on some key areas of healthcare. The influence of AI has grown, as seen with personal assistant apps developed to help patients with drug warnings, patient education material, and monitoring of their health condition. AI-driven virtual human avatars have been created to converse with patients with mental health problems and to help in obtaining a diagnosis and treatment, an activity that was prevalent during the isolation days of the COVID-19 pandemic.2

In the last fifty years, laboratory science has seen significant changes in the application of new technologies and methodologies. As new testing modalities were developed and implemented, testing volumes for most clinical laboratories increased. This was especially evident during the COVID-19 pandemic, with its increased workloads, staffing shortages, staff burnout, and supply chain issues. From the days of manual pipetting to sophisticated automated track systems, the need to manage the ever-changing laboratory environment has inspired innovative laboratories to consider the use of artificial intelligence–based systems.

Artificial intelligence

In 1950, the British mathematician and computer scientist Alan Turing proposed a thought experiment suggesting that machines (i.e., computers) could potentially mimic the way the human brain collects and assembles information to solve problems and make key decisions. Thus the Turing Test (TT) was introduced, a method of inquiry to see whether a third-party judge could differentiate between the responses of a human and those of a machine. If the judge could not determine whether the majority of correct responses came from the machine or the human respondent, the machine passed the Turing Test and was considered to possess artificial intelligence. Today, with improved acumen in computer science and access to cloud computing, the TT reveals some limitations. In response, the Lovelace Test 2.0 became another tool for determining the presence of intelligence. Ada Lovelace (1815-1852), considered the first computer programmer, believed that true machine intelligence had to demonstrate creativity (create art, poems, stories, engineering, science, etc.). Thus, this test determines a machine’s ability to be creative by producing something that the programmer could not explain.

Decades would pass before computer technology provided the computing power and storage capabilities needed to support data acquisition and the running of complex programs, yet the concept of artificial intelligence (AI) was born. Today, a number of AI platforms are available, such as Amazon’s Alexa, Google’s Med-PaLM, ChatGPT-4, Bard, BERT, DALL-E, and Watson, to name a few. As computer technology improved, the capability to collect, store, and process data at phenomenal speeds grew, in part due to cloud computing: the use of a network of remote servers that manage and process data externally, thus freeing up internal computer resources. This set the stage for the presence of big data sets, the key ingredient for AI technology.1 The introduction of AI and robotics has already had a profound impact on our daily lives and has been suggested as the beginning of the Fourth Industrial Revolution.

What is artificial intelligence?

To better understand and appreciate the significance of AI and its potential impact in the near future, some basic information about what AI is and how it works can be useful. The purpose of AI is to make decisions based on historical or real-time data. From the outcomes of those decisions, it refines and improves its output, making changes as needed. AI consists of several subsets: neural networks, deep learning, and machine learning. In developing AI programs, algorithms are created using large databases to provide a set of instructions, followed in a specific order, that ultimately drives the decision-making process.

Neural networks: Contain specific amounts of information or data. Layers of nodes are created and then adapt to learning from the outcomes. In combining computer science and statistics, the results lead to continuous improvement in solving complex issues and become the basis for algorithms. This is similar to the human brain where neurons interconnect with other neurons to receive (input layer), process (hidden layer), and transmit (output layer) information. Neural networks learn the rules by finding patterns in large data sets that are available.3,4
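The input, hidden, and output layers described above can be illustrated with a minimal sketch. The weights and biases below are hypothetical values picked by hand purely for illustration; in a real network they would be learned from large data sets, and the layer sizes would be far larger.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer of nodes: each node sums its weighted inputs, adds a bias,
    and passes the result through the sigmoid function."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters (assumptions, not trained values).
HIDDEN_WEIGHTS = [[0.5, -0.4], [0.3, 0.8]]  # two hidden nodes, two inputs each
HIDDEN_BIASES = [0.0, -0.1]
OUTPUT_WEIGHTS = [[1.2, -0.7]]              # one output node, two hidden inputs
OUTPUT_BIASES = [0.05]


def forward(inputs):
    """Receive (input layer), process (hidden layer), transmit (output layer)."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)


if __name__ == "__main__":
    print(forward([1.0, 0.0]))
```

Training, which this sketch omits, would consist of repeatedly adjusting the weights and biases so that the output moves closer to known correct answers.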

Machine learning: Occurs when computers collect billions of bits of data that are then used in creating statistical models in building algorithms. Because of the vast amounts of data used in AI, much is broken down into smaller, more manageable units called tokens. Tokens are words, characters, numbers, or symbols that then can be converted to numerical units and applied in developing algorithms efficiently. This allows the computer to learn and adapt, a process that was modeled after the human brain.5 Using statistical methods, algorithms are developed and ‘trained’ to classify or predict outcomes based on relevant data mining information and past outcomes.6 Machine learning does not involve explicit programming to reach a conclusion but recognizes patterns to make a prediction.
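As a rough illustration of how text can be broken into tokens and converted to numerical units, consider the sketch below. The splitting rule and the function names (`tokenize`, `build_vocabulary`, `encode`) are assumptions made for this example; production systems typically use more elaborate schemes that split words into smaller pieces.

```python
import re


def tokenize(text):
    """Split text into word, number, and symbol tokens (a simplified rule)."""
    return re.findall(r"[A-Za-z]+|\d+(?:\.\d+)?|[^\sA-Za-z\d]", text.lower())


def build_vocabulary(tokens):
    """Assign each distinct token the next unused integer ID."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its numerical ID."""
    return [vocab[tok] for tok in tokens]


if __name__ == "__main__":
    sample = "Low platelet count; platelet count 85."
    tokens = tokenize(sample)
    vocab = build_vocabulary(tokens)
    print(tokens)
    print(encode(tokens, vocab))
```

Repeated words such as "platelet" and "count" map to the same ID each time they appear, which is what lets a statistical model count and compare patterns across large volumes of text.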

Deep learning: Is a subset of machine learning. It uses neural networks with many hidden layers to identify patterns in unstructured data such as images, text, sound, and time-based inputs. Deep learning provides the logical structure needed for analyzing complex data, allowing certain conclusions to be drawn, and again is analogous to how a human brain would work.6 Deep learning is the basis for digital assistants, voice-enabled TV remotes, credit card fraud detection programs, and self-driving cars. It is a type of AI that generates answers or decisions based on past experiences rather than predetermined calculations/algorithms.7

Generative AI: May be considered the next phase in AI. In collecting raw data sets from users' queries (deep learning), certain patterns are identified to create new text, video, and images. ChatGPT is an example and has been used in healthcare as a tool for some basic administrative tasks such as billing, post-appointment clinical notes, and communicating with patients. Such communications with patients are then entered directly into a patient’s electronic health record (EHR). It has been reported that patients were more willing to share information and preferred using the chatbot rather than talking with a healthcare professional.8

Impact of AI on healthcare and the laboratory

A few decades ago, laboratory test results were provided in hand-written reports that had to be manually delivered to each nursing unit or mailed to a healthcare provider. As digital technology improved, the laboratory information system (LIS) became a critical part of laboratory management, not only improving the quality and timeliness of delivering patient reports but also improving the overall efficiency and quality of patient care. Besides managing patient test results, the LIS has become a resource for managing internal and external quality control/assessments, test requests, blood collection, turnaround times, delta-checks, instrument management, and other quality management information, collectively amassing large amounts of data. Artificial intelligence has taken the beginning steps in managing this process.9

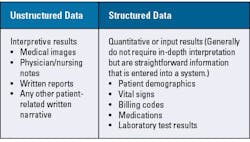

In recent years, personalized medicine, the practice of using genetic and other biodata to make healthcare decisions unique to an individual, has been evolving. To achieve this, significant computing power is needed to review medical literature; analyze patient test results; and provide the best options in diagnosing, treating, and managing that individual patient. Artificial intelligence can play a big role in that. This type of precision medicine allows patients to be accurately profiled based on personal examinations and laboratory tests. These data sets may be viewed as structured data or as unstructured data (see Table 1). It has been estimated that healthcare providers generate 137 terabytes daily, or about 50 petabytes every year, most of which is considered unstructured. Big healthcare data sets are continually growing, in part due to ever-evolving medical device technology, genetic testing, and patient-generated health data (point-of-care testing, wearable devices, etc.). A single chest X-ray is about 15 megabytes (MB) in size and a 3-D mammogram is about 300 MB, but a digital pathology file consumes as much as 3 gigabytes. Collating this kind of useful patient information in a practical and efficient manner is a part that AI is expected to play.10
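The figures quoted above can be checked with simple arithmetic. The short sketch below assumes decimal units (1 petabyte = 1,000 terabytes and 1 gigabyte = 1,000 megabytes) and a 365-day year; the variable names are chosen only for this example.

```python
TB_PER_PB = 1000   # assumption: decimal units
MB_PER_GB = 1000   # assumption: decimal units
DAYS_PER_YEAR = 365

# Daily volume quoted in the text, scaled to a year.
daily_tb = 137
yearly_tb = daily_tb * DAYS_PER_YEAR
yearly_pb = yearly_tb / TB_PER_PB

# Approximate file sizes quoted in the text, in megabytes.
chest_xray_mb = 15
mammogram_3d_mb = 300
digital_pathology_mb = 3 * MB_PER_GB

# How many of the smaller images take up the space of one pathology file.
xrays_per_pathology_file = digital_pathology_mb // chest_xray_mb
mammograms_per_pathology_file = digital_pathology_mb // mammogram_3d_mb

if __name__ == "__main__":
    print(f"{yearly_tb} TB per year, or about {yearly_pb:.0f} PB")
    print(f"One pathology file ~ {xrays_per_pathology_file} chest X-rays "
          f"or {mammograms_per_pathology_file} 3-D mammograms")
```

Under these assumptions, 137 terabytes a day works out to 50,005 terabytes a year, consistent with the estimate of about 50 petabytes, and a single digital pathology file occupies the space of roughly 200 chest X-rays.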

As noted, data management in healthcare is essential. It has been estimated that about 30% of the world’s data is related to healthcare, or roughly 270 GB of healthcare data for each person in the world.11 It is anticipated that these databases will continue to grow, thus the need for efficient data management that can parse out critical bits of healthcare information to providers and patients. Critical to the process is ensuring data privacy and the ethical and responsible use of AI wherever it is applied.

In the clinical laboratory, numerous advances in the automation of diagnostic testing have not only improved accuracy, processing, and data collection but also provided a platform for new testing modalities. In addition, data-collecting devices, such as point-of-care testing, smartwatches, Wi-Fi–enabled arm bands, or other real-time biometrics, have been applied in the prevention, diagnosis, treatment, and management of patient issues. In one study of 500,000 people using such AI-supported technology, readmission rates and emergency department visits were significantly reduced. This also resulted in fewer follow-up home visits and better patient adherence to treatment plans. Cost savings followed as well: Grady Hospital in Atlanta saw a $4 million decrease in readmission-related costs over two years.2

In Pennsylvania, AI algorithms were trained using outcome data from 1.25 million surgical patients and used to identify high-risk post-surgical patients, for example by predicting the likelihood of developing sepsis or respiratory problems. The algorithms also predicted the need for massive blood transfusions. The ability to prepare multiple units of blood before surgery minimizes the wait time for the blood bank to process the many blood units/products needed. It also requires fewer technical staff to prepare the units and ensures type-matched products are available.12

In addition, some wearable devices have been used in predicting healthcare issues based on changes seen in some basic laboratory tests such as hematocrit, hemoglobin, glucose, and platelets, thus signaling a potential underlying health issue.13 In managing a patient’s electronic health record (EHR), AI programs can find and present any abnormal laboratory test and contrast it with other patient data. For example, a low platelet count would certainly be noted; however, AI could also explore a patient’s medication list and promptly notify the healthcare practitioner of possible drug-induced thrombocytopenia, a process all done in the background.14
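The platelet example can be sketched as a simple background check. This is a rule-based stand-in rather than a trained AI model, and everything in it is an assumption made for illustration: the `PatientRecord` structure, the lower limit of 150 x 10^9/L, and the short, incomplete drug list. It is not clinical guidance.

```python
from dataclasses import dataclass, field

# Assumed lower reference limit for platelets, in units of 10^9 per liter.
PLATELET_LOWER_LIMIT = 150

# Illustrative, incomplete list of drugs associated with thrombocytopenia.
DRUGS_LINKED_TO_THROMBOCYTOPENIA = {
    "heparin",
    "quinine",
    "vancomycin",
    "linezolid",
    "valproic acid",
}


@dataclass
class PatientRecord:
    """Hypothetical, minimal slice of an electronic health record."""
    patient_id: str
    platelet_count: float
    medications: list = field(default_factory=list)


def flag_possible_drug_causes(record: PatientRecord) -> list:
    """Return listed medications worth reviewing when the platelet count is low.

    An empty list means either the count is not low or no listed drug is present.
    """
    if record.platelet_count >= PLATELET_LOWER_LIMIT:
        return []
    return sorted(
        med.lower()
        for med in record.medications
        if med.lower() in DRUGS_LINKED_TO_THROMBOCYTOPENIA
    )


if __name__ == "__main__":
    record = PatientRecord("demo-001", 85, ["Heparin", "Metformin"])
    suspects = flag_possible_drug_causes(record)
    if suspects:
        print(f"Low platelets for {record.patient_id}; review: {', '.join(suspects)}")
```

A production system would draw on a curated drug knowledge base and the timing of each prescription rather than a fixed list, but the cross-referencing step is the same in spirit.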

Much of this information is then relayed to databases that, with the aid of an appropriate algorithm, can detect, monitor, and manage patient care activities. AI can serve as a tool in support of clinical decision-making, as seen with ‘Watson for Oncology,’ an application from IBM that can help to better diagnose and classify treatments for various cancers such as breast, colon, rectal, prostate, gastric, lung, ovarian, and cervical cancers. Once a cancer patient’s information is entered, a treatment plan can be devised based on data extracted and collated from past clinical cases, 290 medical journals, 200 textbooks, and 12 million pages of medical research.2

Another area in which AI has been used is the diagnosis of rare diseases. There are as many as 8,000 known rare diseases affecting as many as 400 million people around the world, and they often require up to five years to diagnose accurately. While many medical professionals are not specifically trained in these diseases or may not even know of them, AI has been shown to be a resource for identifying them based on symptomatic patterns and associations that would otherwise not be detected by a human. It also can forecast disease progression and offer targeted treatment regimens.15

An AI-based tool developed by Harvard Medical School has been used in the genomic profiling of brain tumors (gliomas). Similar to performing a frozen section during surgery, the Cryosection Histopathology Assessment and Review Machine (CHARM) can detect genetic mutations, differentiate between the types of glioma, provide associated treatment plans, and offer a prognosis in real time.16 Over 12 million mammograms are done each year in the United States of which some are erroneously identified as cancer positive. AI-assisted programs have been used to read and translate these images 30 times faster than reading these images manually and with 99% accuracy.17 In another example, an AI-enabled eye disease diagnosis system provided the stage of the disease in 30 seconds with a 95% accuracy rate. It has been reported that 60% of all medical errors are due to a wrong initial diagnosis resulting in an estimated 40,000 to 80,000 deaths each year in the United States. The intent of using AI in medicine is to provide healthcare professionals with a resource that will ultimately improve patient care with no intent of replacing them.2

As AI databases are developed and expanded, algorithms can and have been created that have improved the precision and speed of predicting various maladies. As an example, AI has been used in predicting the diagnosis of iron-deficiency anemia (with low serum iron levels) based on routine CBC results. AI has been used in analyzing digital images of urine sediment, microbiology plate reading, and predicting the prognosis for patients with 14 different cancer types by analyzing digital histology sections and genomic data. It also can help in identifying what laboratory tests should be ordered, thus reducing the number (up to 25% of orders) of unnecessary repeat laboratory tests.18,19,20

Robotics and drones

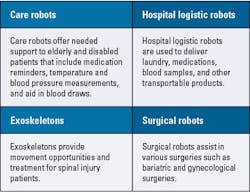

Robotics and drones have had a long history with the military. AI-driven robots and drones are now used for medical purposes in hospitals and other healthcare facilities around the world. Surgical assistance from robots such as the da Vinci robot provides better precision, dexterity, and control in surgery. The da Vinci system has been used in cardiac, urologic (prostate cancer), gynecologic (hysterectomies), pediatric, and general surgeries requiring minimal surgical incisions, which improves patient recovery, with less blood loss, less pain, and less scarring.21 Procedures in robotic manipulation have been formalized, with structured training (Master of Science in Robotic Surgery) available to physicians that offers state-of-the-art techniques.22

There is a greater presence of mobile robots in hospitals, providing more timely delivery of medications, supplies, and laboratory specimens. This alleviates the need to hire staff specifically for transport services, which would otherwise increase labor costs. In many cases, nurses, aides, or clerical staff are used for transport, personnel who are in short supply and have other pressing duties. The implementation of automated mobile robots also reduced the need for hard-to-supply PPE (personal protective equipment) and minimized staff exposure to patients in isolation. Table 2 identifies the four major types of robots used in healthcare.

In the past few decades, the clinical laboratory has expanded its footprint beyond the hospital walls. A tremendous amount of time and effort has been spent on specimen collection and delivery to the laboratory. While still in the early stages, unmanned aerial vehicles (UAVs or drones) have been successfully used by a number of laboratories to transport specimens, provide phlebotomy supplies, and move needed blood products quickly and safely. The use of drones is particularly efficient in busy, high-traffic metropolitan areas as well as difficult-to-reach rural areas that are at a distance from a laboratory. Drones have been employed in underserved areas in many countries, particularly during medical emergencies and disasters.23

AI issues to consider

While AI and robotics have many positive aspects, they are still a work in progress. One issue that has raised concern is trust in the technology and the safety of those involved. In these still early days of AI development, some inherent concerns have been identified and are being attended to.

AI-related problems are likely due to the high dependence on how AI algorithms are ‘taught,’ meaning that limited, misleading, inaccurate, or biased data input will result in incorrect outcomes. Further, issues of ethics, transparency, copyright problems, bias, plagiarism, and other legal matters have been voiced.3,24 In addition, there is some fear that certain jobs may be replaced by AI. However, it is believed that new career opportunities will evolve in creating and supporting AI functions. While it has been estimated that AI would automate the workload for 60%-70% of workers performing various manual activities, to date, the impact of AI on the labor market is minimal at best and focused on unique parts of the economy.25 Regardless, even with AI involvement, human intervention will still be required.2,26 These concerns about what happens when things go wrong have led to the creation of the Artificial Intelligence Incident Database (https://incidentdatabase.ai), a growing repository of AI-related failures that have been reported as causing “harms or near harms.”27 As humans learn from their mistakes, AI, too, will learn.

Conclusion

As with any growing technology, early results may not always be perfect, but as technology improves, it is certain that AI will play a bigger role in healthcare and other professions, with billions of dollars invested in its development. As for the laboratory, there appear to be a number of areas where AI guidance would improve efficiency and the quality of services. Critical to the development of AI is the assurance that the ‘learning data’ used in developing an AI algorithm is complete and unbiased. As the adage goes, ‘garbage in, garbage out’: poor input data will result in poor and/or inaccurate outcomes. Yet, AI continues to learn, grow, and be implemented in many areas of everyday life, including healthcare and the laboratory.

While still in the early stages, AI has been incorporated into some areas of the laboratory such as genetic testing for a number of syndromes, cancers, various strains of bacteria, and digital pathology. As was the case when laboratory instrumentation integrated computer technology several decades ago, efforts to implement AI in laboratory practices by predicting test values, offering better test result interpretations, providing sophisticated instrumentation management, and generating diagnostic evaluations are in the works. In addition to the technical considerations, AI could improve laboratory management, such as quality assurance practices, distribution of test results, and better defining of true reference values that would minimize patient bias.18 AI, though not perfect at this juncture, will drive many aspects of everyday life, especially healthcare. As AI continues to improve, grow, and impact healthcare, it is critical to ensure that it does not overwhelm and remove the human element in interpersonal transactions and that the end results generated by AI are productive and not destructive.

References

1. SITNFlash. The history of artificial intelligence. Science in the News. Published August 28, 2017. Accessed January 24, 2024. https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/.

2. Lee D, Yoon SN. Application of artificial intelligence-based technologies in the healthcare industry: Opportunities and challenges. Int J Environ Res Public Health. 2021;18(1):271. doi:10.3390/ijerph18010271.

3. Lawton G. What is generative AI? Everything you need to know. Enterprise AI. Published January 18, 2024. Accessed January 24, 2024. https://www.techtarget.com/searchenterpriseai/definition/generative-AI.

4. Pure Storage. Deep learning vs. Neural networks. Pure Storage Blog. Published October 26, 2022. Accessed January 24, 2024. https://blog.purestorage.com/purely-informational/deep-learning-vs-neural-networks/.

5. What is machine learning? Ibm.com. Accessed January 24, 2024. https://www.ibm.com/topics/machine-learning.

6. Wolfewicz A. How do machines learn? A beginners guide. Levity.ai. Published November 16, 2022. Accessed January 24, 2024. https://levity.ai/blog/how-do-machines-learn.

7. Macrometa - The hyper distributed cloud for real-time results. Macrometa. Accessed January 24, 2024. https://www.macrometa.com/.

8. Perna G, Turner BEW. Dr. ChatGPT: A guide to generative AI in healthcare. Modern Healthcare. Published May 15, 2023. Accessed January 24, 2024. https://www.modernhealthcare.com/digital-health/chatgpt-healthcare-everything-to-know-generative-ai-artificial-intelligence.

9. Padoan A, Plebani M. Flowing through laboratory clinical data: the role of artificial intelligence and big data. Clin Chem Lab Med. 2022;60(12):1875-1880. doi:10.1515/cclm-2022-0653.

10. Eastwood B. How to navigate structured and unstructured data as a healthcare organization. HealthTech Magazine. Published May 8, 2023. Accessed January 24, 2024. https://healthtechmagazine.net/article/2023/05/structured-vs-unstructured-data-in-healthcare-perfcon.

11. Miclaus K. Top 3 barriers to entry for AI in health care. Dataiku.com. Published July 29, 2022. Accessed January 24, 2024. https://blog.dataiku.com/top-3-barriers-ai-health-care.

12. Melchionna M. ML Model Detects Patients at Risk of Postoperative Issues. Healthitanalytics.com. Published July 7, 2023. Accessed January 24, 2024. https://healthitanalytics.com/news/ml-model-detects-patients-at-risk-of-postoperative-issues.

13. Smartwatch data used to predict clinical test results. National Institute of Biomedical Imaging and Bioengineering. Published November 30, 2021. Accessed January 24, 2024. https://www.nibib.nih.gov/news-events/newsroom/smartwatch-data-used-predict-clinical-test-results-4.

14. Harris JE. An AI-Enhanced Electronic Health Record Could Boost Primary Care Productivity. JAMA. 2023;330(9):801-802. doi:10.1001/jama.2023.14525.

15. Visibelli A, Roncaglia B, Spiga O, Santucci A. The impact of artificial Intelligence in the odyssey of rare diseases. Biomedicines. 2023;11(3):887. doi:10.3390/biomedicines11030887.

16. Melchionna M. AI Tool Provides Profile of Brain Cancer Genome During Surgery. Healthitanalytics.com. Published July 11, 2023. Accessed January 24, 2024. https://healthitanalytics.com/news/ai-tool-provides-profile-of-brain-cancer-genome-during-surgery.

17. PricewaterhouseCoopers. What doctor? Why AI and robotics will define new health. PwC. Accessed January 24, 2024. https://www.pwc.com/gx/en/news-room/docs/what-doctor-why-ai-and-robotics-will-define-new-health.pdf.

18. A status report on AI in laboratory medicine. Myadlm.org. Published January 1, 2023. Accessed January 24, 2024. https://www.myadlm.org/cln/articles/2023/janfeb/a-status-report-on-ai-in-laboratory-medicine.

19. Newton E. How can clinical labs benefit from machine learning and AI? clinicallab. Published October 10, 2022. Accessed January 24, 2024. https://www.clinicallab.com/how-can-clinical-labs-benefit-from-machine-learning-and-ai-26775.

20. Artificial intelligence in pathology. College of American Pathologists. Published April 16, 2020. Accessed January 24, 2024. https://www.cap.org/member-resources/councils-committees/informatics-committee/artificial-intelligence-pathology-resources.

21. Da Vinci robotic surgery. Upstate.edu. Accessed January 31, 2024. https://www.upstate.edu/community/services/surgery/davinci.php.

22. Online master of science in robotic surgery. AdventHealth University. Accessed January 23, 2024. https://www.ahu.edu/programs/online-master-of-science-robotic-surgery.

23. Laksham KB. Unmanned aerial vehicle (drones) in public health: A SWOT analysis. J Family Med Prim Care. 2019;8(2):342-346. doi:10.4103/jfmpc.jfmpc_413_18.

24. Sallam M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare (Basel). 2023;11(6):887. doi:10.3390/healthcare11060887.

25. Past AI100 Officers. Gathering strength, gathering storms: The one hundred year study on artificial intelligence (AI100) 2021 study panel report. One Hundred Year Study on Artificial Intelligence (AI100). Accessed January 24, 2024. https://ai100.stanford.edu/gathering-strength-gathering-storms-one-hundred-year-study-artificial-intelligence-ai100-2021-study.

26. Lutkevich B. Will AI replace jobs? 9 job types that might be affected. WhatIs. Published November 3, 2023. Accessed January 24, 2024. https://www.techtarget.com/whatis/feature/Will-AI-replace-jobs-9-job-types-that-might-be-affected.

27. McGregor S. When AI systems fail: Introducing the AI incident database. Partnership on AI. Published November 18, 2020. Accessed January 24, 2024. https://partnershiponai.org/aiincidentdatabase/.

28. PricewaterhouseCoopers. No longer science fiction, AI and robotics are transforming healthcare. PwC. Accessed January 24, 2024. https://www.pwc.com/gx/en/industries/healthcare/publications/ai-robotics-new-health/transforming-healthcare.html.

About the Author

Anthony Kurec, MS, MASCP, MLT, H(ASCP)DLM, is Clinical Associate Professor, Emeritus, at SUNY Upstate Medical University in Syracuse, NY. He is also a member of the MLO Editorial Advisory Board.